Digital Object Management

Import and Publish Procedures

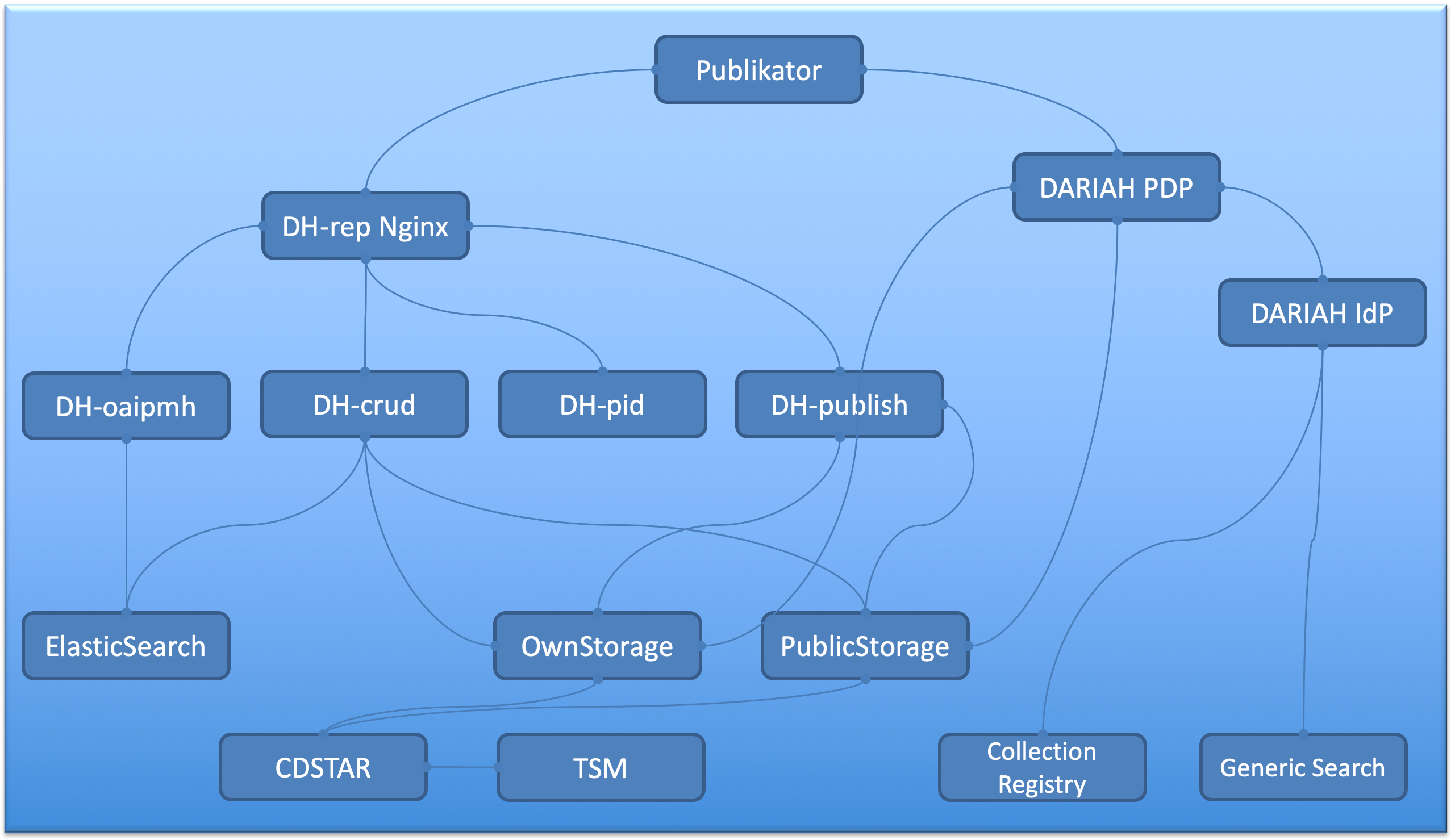

This section gives an overview of the main technologies and procedures used for appropriate data management. It describes the import and publish workflows and relates them to the architecture of the DARIAH-DE Repository.

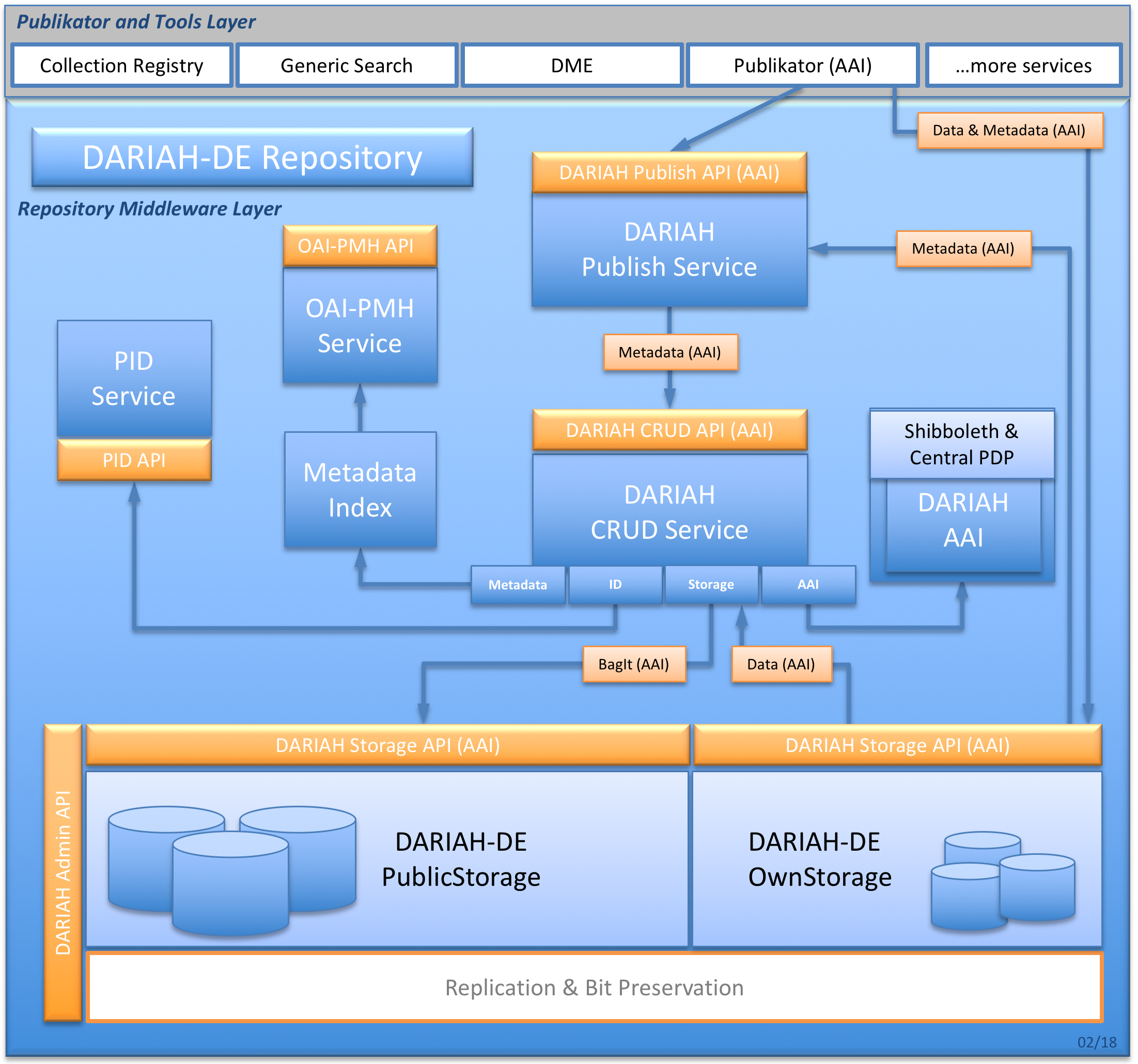

Fig. 1: The DARIAH-DE Repository Architecture

DARIAH-DE operates the DARIAH-DE Repository as a digital long-term archive for research data from the humanities and cultural studies. The DARIAH-DE Repository is a central component of the DARIAH-DE Research Data Federation Architecture, which aggregates various services and applications and can be used conveniently by DARIAH users.

The DARIAH-DE Repository allows you to store your research data in a sustainable and secure way, to describe it with metadata and to publish it. Your collection as well as each individual file remains available in the DARIAH-DE Repository in the long term and receives a unique and permanently valid Digital Object Identifier (DOI), with which your data can be permanently referenced and cited. In addition, all collections are added to the Repository Collection Registry (CR), so that they can also be found in the Repository Search and in the DARIAH-DE Generic Search (GS).

The dynamic part of the DARIAH-DE storage (OwnStorage) is mainly used by the DARIAH-DE Publikator to store data that is currently in the publication process. This data is protected by rights management via the DARIAH-DE Policy Decision Point (PDP) – using Role Based Access Control (RBAC) and the Lightweight Directory Access Protocol (LDAP) – so that only the users who uploaded and own the data can access it and later publish it.

Data that has been published is moved to the static part of the DARIAH-DE storage (PublicStorage), where it is available for everyone, and it

- will be stored safely in the repository,

- will be assigned a Digital Object Identifier (the collection itself as well as all its files).

The data then

- can be permanently referenced and cited,

- is publicly accessible,

- is described as a collection in the Collection Registry, and

- is searchable in the Repository Search as well as in the DARIAH-DE Generic Search.

Both OwnStorage and PublicStorage are accessed via the DARIAH-DE Storage API and the DARIAH-DE PDP.
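The access rules for the two storage areas can be summarised as a small decision function. This is a hypothetical model for illustration only: the real decisions are made by the DARIAH-DE PDP using RBAC and LDAP, and the names `Area`, `StoredObject`, `may_read` and `may_publish` are invented here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Area(Enum):
    OWN = "OwnStorage"        # dynamic part: data still in the publication process
    PUBLIC = "PublicStorage"  # static part: published data


@dataclass(frozen=True)
class StoredObject:
    object_id: str
    owner: str
    area: Area


def may_read(user: Optional[str], obj: StoredObject) -> bool:
    """Hypothetical read rule: published data for everyone, OwnStorage data for its owner only."""
    if obj.area is Area.PUBLIC:
        return True
    return user is not None and user == obj.owner


def may_publish(user: Optional[str], obj: StoredObject) -> bool:
    """Hypothetical publish rule: only the owner, and only for data still in OwnStorage."""
    return obj.area is Area.OWN and user is not None and user == obj.owner
```

For example, `may_read("bob", StoredObject("obj-1", "alice", Area.OWN))` is `False`, while the same object in `Area.PUBLIC` is readable even by an anonymous user (`None`).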

To publish data in the DARIAH-DE Repository, you can either import it using the DARIAH-DE Publikator or publish it directly into the DARIAH-DE Repository using the import API of the DH-publish service.

For more information about importing and publishing – or Publikator usage in general – please refer to the Publikator user guide and the DARIAH-DE Repository documentation.

Import and Publish Workflows

Publikator GUI Import

You can import data into the DARIAH-DE Repository using the Publikator. First, you upload your data to the DARIAH-DE OwnStorage and tag it with collection and item metadata. The final step is the publication process. Various services are involved:

- Authenticate and authorize (DARIAH AAI and DARIAH-DE PDP)

- Upload data (OwnStorage) and tag metadata (Publikator)

- Publish the data (Publish Service), which

    - reads all the collection RDF models created by Publikator from OwnStorage (module: ReadCollectionModels),

    - checks all the given collections for data and metadata compliance (module: CheckCollections),

    - gets ePIC Handle PIDs for every object (module: GetPids),

    - registers Datacite DOIs via the PID Service and maps DC metadata to Datacite metadata (module: RegisterDois),

    - creates collection and subcollection objects (module: CreateCollections),

    - processes various metadata, e.g. creates administrative and technical metadata (module: MetadataProcessor),

    - submits files via the CRUD Service to the PublicStorage, including feeding the metadata index and the OAI-PMH data provider (module: SubmitFiles),

    - adds the collection to the Collection Registry, so that the collection can be found in the Repository Search (module: NotifyCollectionRegistry),

    - updates the PID metadata (module: UpdatePidMetadata), and

    - stores the overall RDF model for later use (module: StoreOverallRDF).

Afterwards, you can optionally edit the description of the published collection in the CR.
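The publication step runs the DH-publish modules listed above in a fixed order. The following Python sketch models only that ordering: the module names come from the list, while `run_publish`, the shared context dictionary and the abort-on-first-error behaviour are assumptions for illustration, not DH-publish's actual implementation.

```python
from typing import Callable, Dict, List

# Module order as listed above; what each module does internally is not modelled.
PUBLISH_MODULES = [
    "ReadCollectionModels",
    "CheckCollections",
    "GetPids",
    "RegisterDois",
    "CreateCollections",
    "MetadataProcessor",
    "SubmitFiles",
    "NotifyCollectionRegistry",
    "UpdatePidMetadata",
    "StoreOverallRDF",
]


def run_publish(modules: Dict[str, Callable[[dict], None]], context: dict) -> List[str]:
    """Run the publish modules strictly in order and abort on the first failure.

    `modules` maps each module name to a (hypothetical) callable that reads and
    updates the shared `context`, e.g. collection models, PIDs and DOIs.
    """
    completed = []
    for name in PUBLISH_MODULES:
        try:
            modules[name](context)
        except Exception as err:
            raise RuntimeError(f"publication aborted in module {name}: {err}") from err
        completed.append(name)
    return completed
```

Running the modules strictly in sequence reflects the data dependencies in the list: for instance, DOIs can only be registered once PIDs exist, and the Collection Registry is only notified after the files have been submitted.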

DH-publish Service API

In principle, the API import works like the GUI import, except that the user has to perform programmatically all the tasks that Publikator otherwise takes care of:

- Get an OAuth2 storage token via the Publikator GUI

- Upload all files to the DARIAH-DE OwnStorage using that token

- Map all filenames to the object IDs returned by OwnStorage

- Create RDF files for every subcollection and a root RDF file according to the DH-publish Documentation

- Send the root RDF file to the DH-publish service, which then runs the publication process as described in Publikator GUI Import above
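The upload, mapping and sending steps can be outlined as follows. This is a hypothetical sketch: the `upload` callable stands in for whatever client you use against the DARIAH-DE Storage API, `PUBLISH_ENDPOINT` is a placeholder rather than the real DH-publish URL, and sending the OAuth2 token as a Bearer header is an assumption. Consult the DH-publish Documentation for the actual endpoints and the required RDF format.

```python
import urllib.request
from pathlib import Path
from typing import Callable, Dict, List

# Placeholder, NOT the real DH-publish URL; see the DH-publish Documentation.
PUBLISH_ENDPOINT = "https://example.org/dhpublish/publish"


def upload_all(files: List[Path], token: str,
               upload: Callable[[Path, str], str]) -> Dict[str, str]:
    """Upload every file and map its filename to the OwnStorage ID that is returned.

    `upload` is a caller-supplied (hypothetical) wrapper around the Storage API.
    """
    return {path.name: upload(path, token) for path in files}


def build_publish_request(root_rdf: bytes, token: str) -> urllib.request.Request:
    """Prepare (but do not send) the request that hands the root RDF file to DH-publish.

    The Bearer header is an assumption; further headers DH-publish may need are omitted.
    """
    return urllib.request.Request(
        PUBLISH_ENDPOINT,
        data=root_rdf,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )
```

The filename-to-ID mapping returned by `upload_all` is what you would use when writing the subcollection and root RDF files, since those files must refer to the uploaded objects by their OwnStorage IDs.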

DARIAH-DE Repository and the Open Archival Information System (OAIS)

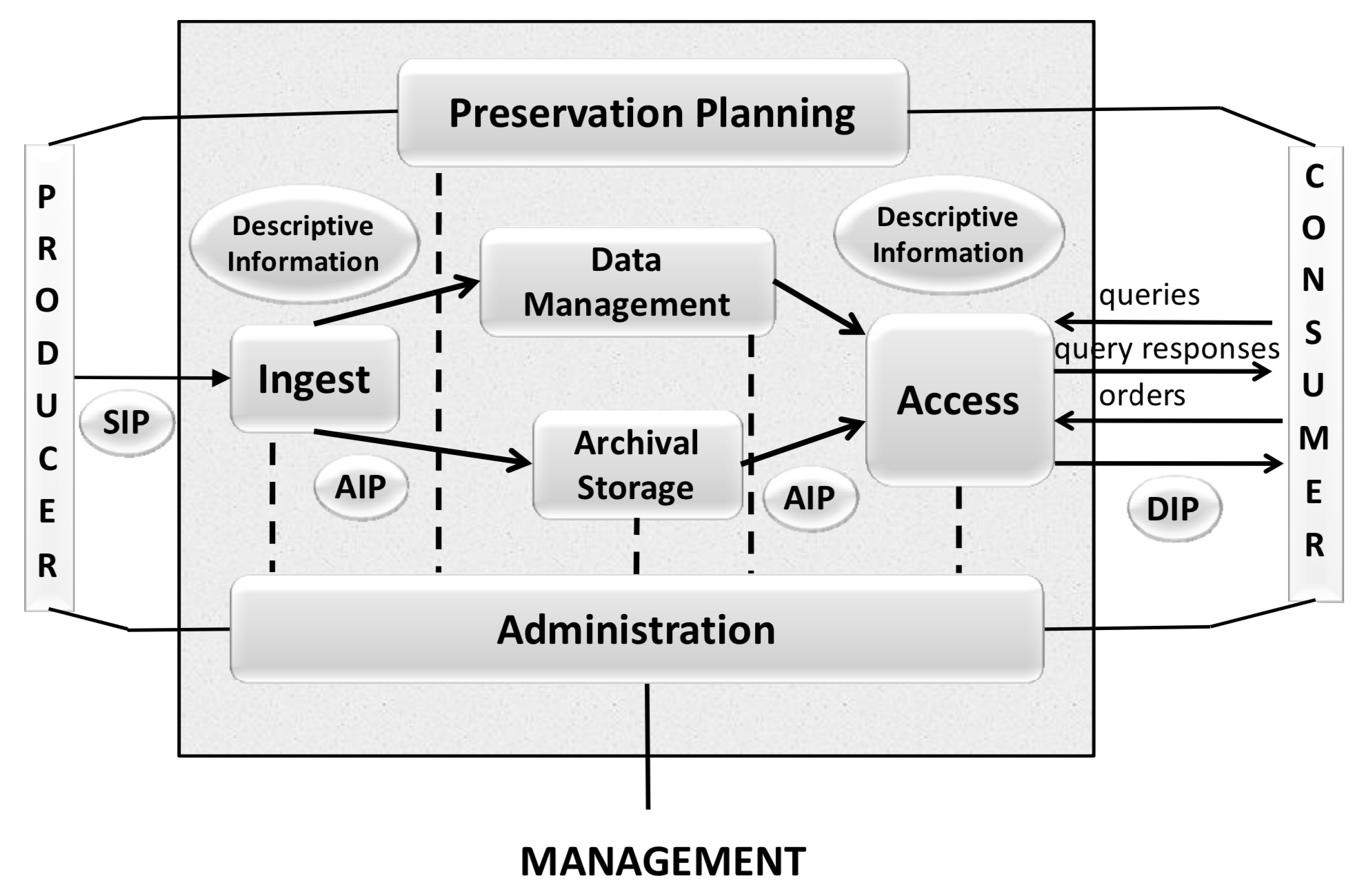

Fig. 2: OAIS Functional Entities (source: Recommendation for Space Data System Practices: REFERENCE MODEL FOR AN OPEN ARCHIVAL INFORMATION SYSTEM, p.4-1)

Fig. 2 shows the basic functional entities of an Open Archival Information System (OAIS), together with the related DARIAH-DE Repository workflows and services:

“The Ingest Functional Entity [...] provides the services and functions to accept Submission Information Packages (SIPs) from Producers [...] and prepare the contents for storage and management within the Archive. Ingest functions include receiving SIPs, performing quality assurance on SIPs, generating an Archival Information Package (AIP) which complies with the Archive’s data formatting and documentation standards, extracting Descriptive Information from the AIPs for inclusion in the Archive database, and coordinating updates to Archival Storage and Data Management.“ – Recommendation for Space Data System Practices: REFERENCE MODEL FOR AN OPEN ARCHIVAL INFORMATION SYSTEM, p.4-1

This corresponds to the DH-crud and DH-publish services, including the metadata indexes: they prepare and validate the data for safe storage and put data and metadata into the core databases for indexing and retrieval (compare fig. 1).

“The Archival Storage Functional Entity [...] provides the services and functions for the storage, maintenance and retrieval of AIPs. Archival Storage functions include receiving AIPs from Ingest and adding them to permanent storage, managing the storage hierarchy, refreshing the media on which Archive holdings are stored, performing routine and special error checking, providing disaster recovery capabilities, and providing AIPs to Access to fulfill orders.“ – Recommendation for Space Data System Practices: REFERENCE MODEL FOR AN OPEN ARCHIVAL INFORMATION SYSTEM, p.4-2

This again corresponds to DH-crud for retrieval and to the underlying storage system, i.e. the DARIAH-DE OwnStorage and PublicStorage, including security checks and maintenance (compare fig. 1).

“The Data Management Functional Entity [...] provides the services and functions for populating, maintaining, and accessing both Descriptive Information which identifies and documents Archive holdings and administrative data used to manage the Archive. Data Management functions include administering the Archive database functions [...], performing database updates [...] performing queries on the data management data to generate query responses, and producing reports from these query responses.“ – Recommendation for Space Data System Practices: REFERENCE MODEL FOR AN OPEN ARCHIVAL INFORMATION SYSTEM, p.4-2

This corresponds to the underlying storage and indexing databases, such as DARIAH AAI, ElasticSearch, ePIC Handle PIDs and DOIs, as well as the DARIAH-DE Generic Search (compare fig. 1).

“The Administration Functional Entity [...] provides the services and functions for the overall operation of the Archive system. Administration functions include soliciting and negotiating submission agreements with Producers, auditing submissions to ensure that they meet Archive standards, and maintaining configuration management of system hardware and software. It also provides system engineering functions to monitor and improve Archive operations, and to inventory, report on, and migrate/update the contents of the Archive. It is also responsible for establishing and maintaining Archive standards and policies, providing customer support, and activating stored requests.“ – Recommendation for Space Data System Practices: REFERENCE MODEL FOR AN OPEN ARCHIVAL INFORMATION SYSTEM, p.4-2

This corresponds to DH-crud as well as to all system maintenance and operation staff and services, such as operating system updates and the monitoring of the DARIAH-DE Repository services (compare fig. 1), and to the Organisational Infrastructure and Data Policies.

“The Preservation Planning Functional Entity [...] provides the services and functions for monitoring the environment of the OAIS, providing recommendations and preservation plans to ensure that the information stored in the OAIS remains accessible to, and understandable by, the Designated Community over the Long Term, even if the original computing environment becomes obsolete. Preservation Planning functions include evaluating the contents of the Archive and periodically recommending archival information updates, recommending the migration of current Archive holdings, developing recommendations for Archive standards and policies, providing periodic risk analysis reports, and monitoring changes in the technology environment and in the Designated Community’s service requirements and Knowledge Base. Preservation Planning also designs Information Package templates and provides design assistance and review to specialize these templates into SIPs and AIPs for specific submissions. Preservation Planning also develops detailed Migration plans, software prototypes and test plans to enable implementation of Administration migration goals.“ – Recommendation for Space Data System Practices: REFERENCE MODEL FOR AN OPEN ARCHIVAL INFORMATION SYSTEM, p.4-2

This entity is not yet implemented for automated use, such as format migration for diverse object formats. Nevertheless, technical metadata are extracted from every single object (DH-crud) for future use, e.g. to retrieve objects of certain formats and versions for upcoming migrations and other preservation actions (see Replication & Bit Preservation in fig. 1). Further adjustments and developments such as format migration will be considered for implementation by observing and analysing best practices of the designated community and taking its feedback into account.

“The Access Functional Entity [...] provides the services and functions that support Consumers in determining the existence, description, location and availability of information stored in the OAIS, and allowing Consumers to request and receive information products. Access functions include communicating with Consumers to receive requests, applying controls to limit access to specially protected information, coordinating the execution of requests to successful completion, generating responses [...] and delivering the responses to Consumers.“ – Recommendation for Space Data System Practices: REFERENCE MODEL FOR AN OPEN ARCHIVAL INFORMATION SYSTEM, p.4-2

This corresponds to the OAI-PMH data provider, which is based on the index database and makes published objects searchable via the Generic Search. Retrieval is then handled via Handle and DOI references that redirect to the objects' DH-crud landing pages.
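Harvesting from an OAI-PMH data provider follows the standard OAI-PMH 2.0 protocol: a `ListRecords` request, then further requests carrying the `resumptionToken` until none is returned. The sketch below builds the request URLs and parses one response page with the standard library only. `OAI_BASE` is an assumption about the DH-oaipmh endpoint and should be checked against the DARIAH-DE Repository documentation; `oai_dc` is the metadata format every OAI-PMH provider must support.

```python
import urllib.parse
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

# Assumed endpoint of the DH-oaipmh data provider; verify before use.
OAI_BASE = "https://repository.de.dariah.eu/1.0/oaipmh/oai"
OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}


def list_records_url(base: str, resumption_token: Optional[str] = None) -> str:
    """First page names a metadata format; follow-up pages carry only the token."""
    if resumption_token:
        params = {"verb": "ListRecords", "resumptionToken": resumption_token}
    else:
        params = {"verb": "ListRecords", "metadataPrefix": "oai_dc"}
    return f"{base}?{urllib.parse.urlencode(params)}"


def parse_page(xml_text: str) -> Tuple[List[str], Optional[str]]:
    """Return the record identifiers of one response page and the next token, if any."""
    root = ET.fromstring(xml_text)
    ids = [el.text for el in root.iterfind(".//oai:record/oai:header/oai:identifier", OAI_NS)]
    token = root.find(".//oai:resumptionToken", OAI_NS)
    return ids, (token.text if token is not None and token.text else None)
```

A harvester would fetch `list_records_url(OAI_BASE)`, pass the response body to `parse_page`, and repeat with the returned token until it is `None` (an empty `resumptionToken` element marks the last page).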

DARIAH-DE Object AIP Integrity

A DARIAH-DE Repository object consists of one package in the Bagit File Packaging Format, which contains all data and metadata related to that object:

- the data file (as is)

- the descriptive metadata file (TTL)

- the administrative metadata file (TTL)

- the technical metadata file (XML)

This object package is imported using DH-crud and can be regarded as the SIP (Submission Information Package) in terms of the Open Archival Information System (OAIS). The same applies to the DIP (Dissemination Information Package), since you can export the Bagit package via the DH-crud API (see DH-crud API Documentation). The AIP (Archival Information Package) is then the data contained in the storage and databases after the DH-crud import. DH-crud

- stores the data file and all metadata files in the DARIAH-DE PublicStorage, and

- puts the metadata into the ElasticSearch database.

The object consistency is checked by the underlying storage system of the PublicStorage (see Storage System below).
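Because each object is stored as a Bagit bag, its payload can also be verified against the bag's own manifest. The sketch follows the BagIt specification (RFC 8493), where each line of `manifest-<algorithm>.txt` holds a checksum followed by a payload path relative to the bag directory. `verify_bag_manifest` is a hypothetical helper; the MD5 default is an assumption chosen to match the md5 digests in the administrative metadata, and percent-encoded file names are not decoded.

```python
import hashlib
from pathlib import Path
from typing import List


def verify_bag_manifest(bag_dir: Path, algorithm: str = "md5") -> List[str]:
    """Return payload paths that are missing or whose checksum differs from the manifest."""
    manifest = bag_dir / f"manifest-{algorithm}.txt"
    problems = []
    for line in manifest.read_text(encoding="utf-8").splitlines():
        if not line.strip():
            continue
        # "<checksum> <path>": the path may contain spaces, so split only once.
        expected, rel_path = line.split(maxsplit=1)
        payload = bag_dir / rel_path
        if not payload.is_file():
            problems.append(rel_path)
            continue
        digest = hashlib.new(algorithm, payload.read_bytes()).hexdigest()
        if digest.lower() != expected.lower():
            problems.append(rel_path)
    return problems
```

An empty result means every file listed in the manifest exists and still has the recorded checksum.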

DARIAH-DE Resolver and PIDs

Information about resolving and Persistent Identifiers can be found on the Resolving and Persistent Identifiers documentation page.

Long-term Preservation and Data Curation

Persistent Identifiers

The DARIAH-DE Repository provides Handle PIDs and Datacite DOIs. Handle PIDs are created for administrative use, whereas Datacite DOIs are used for citation and referencing. More information about resolving and Persistent Identifiers can be found on the DARIAH-DE Repository Documentation: Resolving and Persistent Identifiers page.
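For the example object discussed in the integrity section, the identifiers map to resolver URLs as sketched below. The Handle prefix `21.11113` and DOI prefix `10.20375` are taken from that example. That a DOI shares its suffix with the object's Handle is an assumption based only on that single example and should be verified. The `@adm` and `@data` suffixes appear in the same example, and `https://hdl.handle.net/api/handles/` is the JSON REST interface of the Handle.net proxy.

```python
from typing import Dict

# Prefixes taken from the example object 21.11113/0000-000B-C8EF-7.
HANDLE_PREFIX = "21.11113"
DOI_PREFIX = "10.20375"


def resolver_urls(suffix: str) -> Dict[str, str]:
    """Resolver URLs for one object; assumes the DOI reuses the Handle suffix."""
    handle = f"{HANDLE_PREFIX}/{suffix}"
    return {
        "handle": f"https://hdl.handle.net/{handle}",          # administrative PID
        "handle_json": f"https://hdl.handle.net/api/handles/{handle}",
        "doi": f"https://doi.org/{DOI_PREFIX}/{suffix}",       # for citation
        "adm_metadata": f"https://hdl.handle.net/{handle}@adm",
        "data": f"https://hdl.handle.net/{handle}@data",
    }
```

For instance, `resolver_urls("0000-000B-C8EF-7")["doi"]` yields `https://doi.org/10.20375/0000-000B-C8EF-7`, the DOI that the example's administrative metadata lists under its older `dx.doi.org` resolver host.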

Technical Metadata Extraction

Technical metadata are created, stored and delivered for every published object using the File Information Tool Set (FITS). The technical metadata can then be accessed for every object via its Handle PID or directly via DH-crud (please see DH-crud API Documentation).
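As a minimal sketch of how the stored technical metadata could support future preservation actions such as selecting objects of a certain format for migration, the code below reads the identified formats and the file size from a FITS output document. It assumes the usual FITS output layout (`identification/identity` elements with `format` and `mimetype` attributes, and `fileinfo/size`) in the `fits_output` namespace; check this against the actual technical metadata delivered by DH-crud. `summarize_fits` and `select_by_mimetype` are hypothetical helpers.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

FITS_NS = {"fits": "http://hul.harvard.edu/ois/xml/ns/fits/fits_output"}


def summarize_fits(xml_text: str) -> Dict[str, object]:
    """Extract the identified formats and the file size from FITS output."""
    root = ET.fromstring(xml_text)
    identities = [
        {"format": ident.get("format"), "mimetype": ident.get("mimetype")}
        for ident in root.iterfind("fits:identification/fits:identity", FITS_NS)
    ]
    size = root.findtext("fits:fileinfo/fits:size", namespaces=FITS_NS)
    return {"identities": identities, "size": int(size) if size else None}


def select_by_mimetype(summaries: Dict[str, Dict[str, object]], mimetype: str) -> List[str]:
    """Return the IDs of objects identified as `mimetype`, e.g. as migration candidates."""
    return sorted(
        object_id for object_id, summary in summaries.items()
        if any(i["mimetype"] == mimetype for i in summary["identities"])
    )
```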

Long-term Preservation Archiving

There are plans to additionally store every published DARIAH-DE Repository object in a long-term preservation (LTP) archive (a so-called dark archive), which will provide a further layer of preservation with more thoroughly documented and certified storage procedures and distributed storage locations.

Storage System

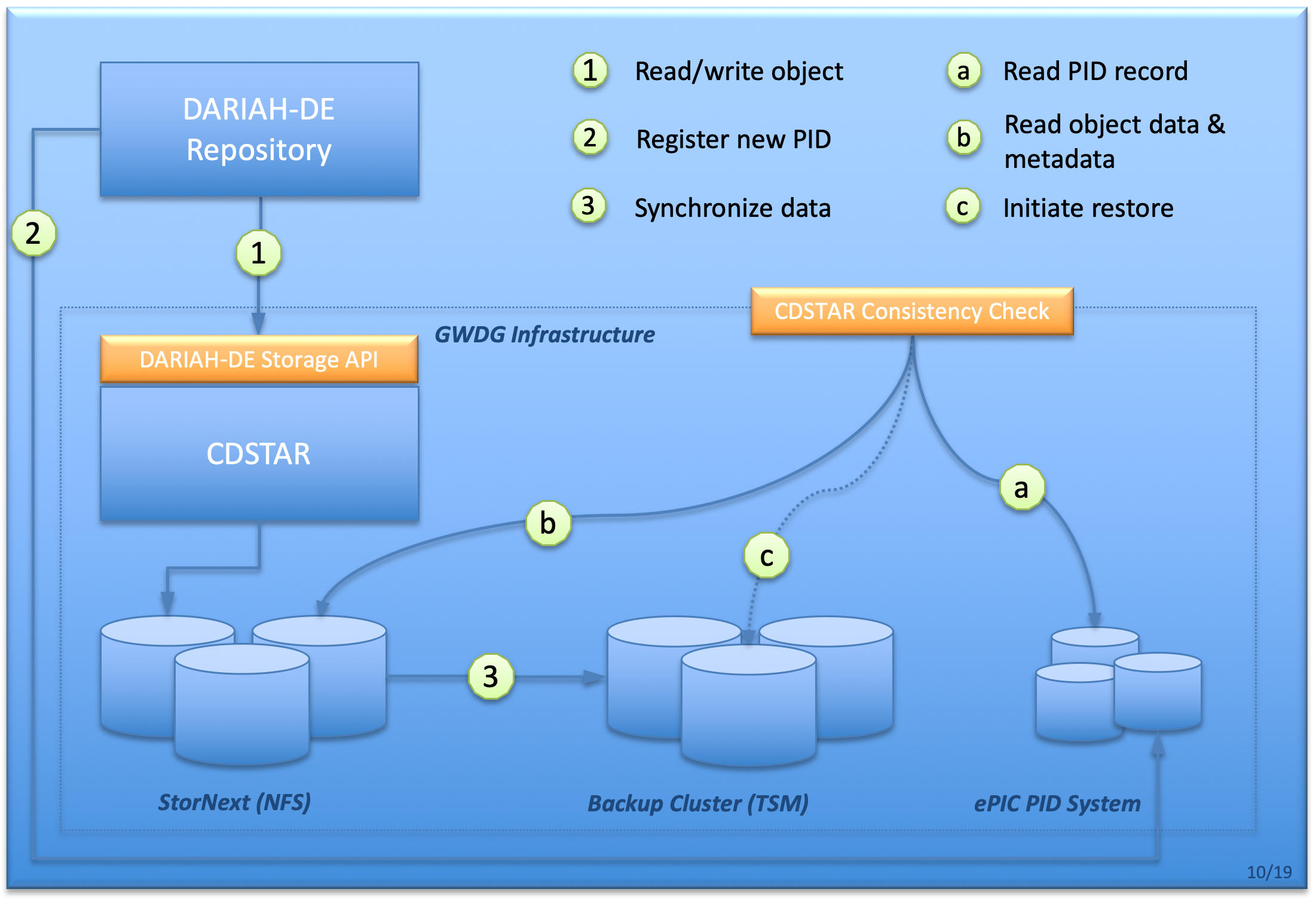

The storage of the DARIAH-DE Repository is realized as a network file system (NFS), which is technically provided by the computing centre of the University of Göttingen (GWDG). This network file system is based on the file service StorNext, one of the storage solutions of GWDG. The StorNext file system in turn relies on several hard drives organized as a RAID6 system. RAID6 combines performance with protection against storage media deterioration: it stores two independent parity blocks per stripe, so the array survives the simultaneous failure of any two drives. Furthermore, the whole repository is backed up using IBM Spectrum Protect (ISP), a well-established tool for data backup and recovery. In addition, GWDG, as a computing centre, uses several sophisticated monitoring frameworks to check the status of its infrastructure. The following figure provides an overview of the overall storage system, including the DARIAH-DE Repository/CDSTAR Consistency Check for data integrity:

Fig. 3: Underlying Storage System Infrastructure

Backup Strategy and Data Recovery

As depicted in fig. 3 and indicated above, the repository data is backed up via TSM (Tivoli Storage Manager, the former name of IBM Spectrum Protect). For this purpose, the TSM client is launched once a day as a background process to compute a virtual folder/file structure that represents the actual structure of the repository in the NFS. This virtual folder/file structure is sent to the TSM server, which compares it with the structure on its own storage medium (the backup storage medium). Only files or folders that differ are copied to the backup storage medium; hence, the repository data is backed up incrementally. However, the old files or folders on the backup storage medium are not overwritten immediately by the new copies. Instead, they are marked as ‘inactive’ and kept for another 90 days, after which they are deleted. Active files, i.e. files that represent the current state of the repository, are never deleted. This architecture ensures that multiple copies of the data exist and that a previous repository state can be restored.
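The incremental backup with a 90-day retention of inactive versions can be modelled in a few lines. This is a simplified, hypothetical model of the behaviour described above, not how TSM is implemented: `BackupStore`, the use of checksums as change indicators, and the treatment of deleted files (deactivated like modified ones) are assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

RETENTION = timedelta(days=90)  # inactive versions are kept for 90 days


@dataclass
class BackupStore:
    active: Dict[str, str] = field(default_factory=dict)  # path -> checksum of current version
    inactive: List[Tuple[str, str, datetime]] = field(default_factory=list)  # (path, checksum, deactivated)

    def incremental_backup(self, snapshot: Dict[str, str], now: datetime) -> List[str]:
        """Copy only new or changed files; keep superseded versions as inactive."""
        copied = []
        for path, checksum in snapshot.items():
            if self.active.get(path) == checksum:
                continue  # unchanged: nothing to transfer
            if path in self.active:
                self.inactive.append((path, self.active[path], now))
            self.active[path] = checksum
            copied.append(path)
        # Assumption: files that disappeared are deactivated rather than deleted at once.
        for path in sorted(set(self.active) - set(snapshot)):
            self.inactive.append((path, self.active.pop(path), now))
        # Inactive versions expire after the retention period; active ones never do.
        self.inactive = [v for v in self.inactive if now - v[2] < RETENTION]
        return copied
```

In this model, a file changed on day 1 keeps its previous version restorable until day 91, when the inactive copy expires, while the current version stays active indefinitely.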

Data recovery is highly case-dependent and is therefore handled manually. A restore can only be performed by the computing centre GWDG, which has to be notified accordingly. The internal security plan contains all necessary information, including contact details.

Data Integrity and Authenticity

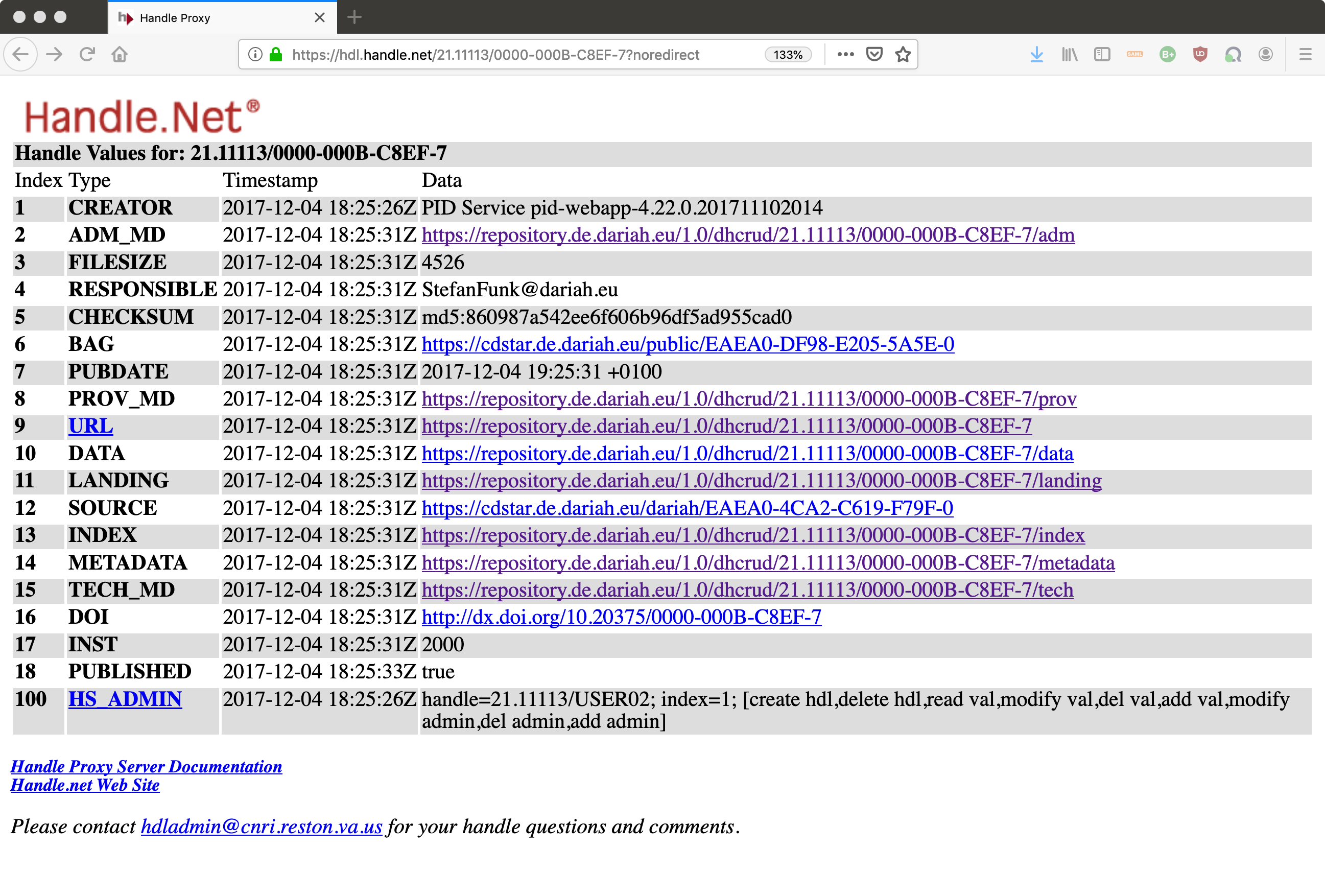

At ingest into the DARIAH-DE Repository, as part of the publication workflow, each object is assigned two persistent identifiers (PIDs): an EPIC2 Handle and a Datacite DOI. The EPIC2 Handle PID is used for administrative purposes, such as providing information on status (public flag), URL resolving, and direct access to the data, the various metadata, and the complete Bagit bag (please see DARIAH-DE Repository Documentation: Resolving and Persistent Identifiers). The Datacite DOI is used for citing and disseminating the objects, since DOIs are well known and widely used in the research community.

An example Handle PID record (21.11113/0000-000B-C8EF-7) is depicted in the following figure:

Fig. 4: ePIC PID record of a DARIAH-DE Repository Object

Checksums are created for every object at ingest and stored in the object’s administrative metadata, which can be accessed via the Handle PID (https://hdl.handle.net/21.11113/0000-000B-C8EF-7@adm) or directly via DH-crud (https://repository.de.dariah.eu/1.0/dhcrud/21.11113/0000-000B-C8EF-7/adm); see the following metadata excerpt:

```turtle
@prefix premis: <http://www.loc.gov/premis/rdf/v1#> .
@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .
@prefix dcterms: <http://purl.org/dc/terms/> .
@prefix dariah: <http://de.dariah.eu/rdf/dataobjects/terms/> .

<http://hdl.handle.net/21.11113/0000-000B-C8EF-7>
    a dariah:Collection ;
    dcterms:created "2017-12-04T19:25:31.028" ;
    dcterms:creator "StefanFunk@dariah.eu" ;
    dcterms:extent "436" ;
    dcterms:format "text/vnd.dariah.dhrep.collection+turtle" ;
    dcterms:identifier <http://dx.doi.org/10.20375/0000-000B-C8EF-7> , <http://hdl.handle.net/21.11113/0000-000B-C8EF-7> ;
    dcterms:modified "2017-12-04T19:25:31.028" ;
    dcterms:source "https://cdstar.de.dariah.eu/dariah/EAEA0-4CA2-C619-F79F-0" ;
    premis:hasMessageDigest "be66b89927c01eb13a0f924f1f7de5a0" ;
    premis:hasMessageDigestOriginator "dhcrud-base-7.36.0-DH.201712041055" ;
    premis:hasMessageDigestType "md5" .
```

This metadata describes the checksum of the object https://hdl.handle.net/21.11113/0000-000B-C8EF-7@data, which is also listed in the Bagit manifest file. The corresponding PID record contains the checksum of the Bagit bag itself at index 5.
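The PID record can also be read programmatically via the Handle.net proxy REST API (`https://hdl.handle.net/api/handles/<handle>`), which returns JSON with a `values` list whose entries carry `index`, `type` and `data` fields. The sketch below picks the value stored at index 5, where, according to the text above, the checksum of the Bagit bag is kept. The helper names are hypothetical, and the exact format of that value is not specified here.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

HANDLE_API = "https://hdl.handle.net/api/handles/"


def fetch_handle_record(handle: str) -> Dict[str, Any]:
    """Fetch a Handle record as JSON from the Handle.net proxy REST API."""
    with urllib.request.urlopen(HANDLE_API + handle) as response:
        return json.load(response)


def value_at_index(record: Dict[str, Any], index: int) -> Optional[str]:
    """Return the data value stored at a given index of a Handle record, if present."""
    for entry in record.get("values", []):
        if entry.get("index") == index:
            return entry.get("data", {}).get("value")
    return None
```

For example, `value_at_index(fetch_handle_record("21.11113/0000-000B-C8EF-7"), 5)` would return the bag checksum stored in the example record (this performs a network request).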

An additional component has been implemented that regularly compares each object’s checksum and size (premis:hasMessageDigest and dcterms:extent) across different sources (see fig. 3). CDSTAR already computes checksums for every stored object. Both attributes are checked regularly (i) in the PID record (fig. 3, step [a]), (ii) in the object’s administrative metadata (fig. 3, step [b]), and (iii) by recomputing them from the objects themselves. This ensures that each object still exists and has not been modified. If these attributes differ, appropriate steps are initiated to determine the cause of the inconsistency, which can eventually lead to a restore from the backup system (fig. 3, step [c]).
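The core comparison of such a consistency check can be sketched as follows. This is a hypothetical illustration, not the implemented component: each check pairs the checksum and size recorded by one source (PID record or administrative metadata) with the bytes of the object that source describes, and `None` stands for an object that can no longer be read. MD5 matches the `premis:hasMessageDigestType` in the example metadata.

```python
import hashlib
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Fingerprint:
    md5: str   # premis:hasMessageDigest
    size: int  # dcterms:extent


def fingerprint_of(data: bytes) -> Fingerprint:
    """Recompute checksum and size from the stored object itself."""
    return Fingerprint(hashlib.md5(data).hexdigest(), len(data))


def find_inconsistencies(checks: List[Tuple[str, Fingerprint, Optional[bytes]]]) -> List[str]:
    """Compare each source's recorded fingerprint with the recomputed one."""
    problems = []
    for source, expected, stored in checks:
        if stored is None:
            problems.append(f"{source}: object missing")
            continue
        actual = fingerprint_of(stored)
        if actual.md5 != expected.md5.lower():
            problems.append(f"{source}: checksum mismatch")
        if actual.size != expected.size:
            problems.append(f"{source}: size mismatch")
    return problems
```

An empty list means all sources agree with the stored objects; any entry would trigger the investigation and, if necessary, the restore described above.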

Handling of Data Deletion and Tombstone Pages

The deletion of published objects is generally not intended. The internal deletion workflow only applies if

- a user wants to have a published object deleted for a relevant private reason, or

- the user or third parties assert copyright or other legal claims regarding a published object (see DARIAH-DE Terms of Use).

Issues can be reported via DARIAH-DE support (support@de.dariah.eu) or directly to the DARIAH-DE Coordination Office (dco-de@de.dariah.eu). The DCO will then verify the issue, contact the author(s) and, if the issue is valid, start the deletion process.

Object data and metadata are then deleted from all databases; only the (administrative) Handle metadata remain, with a DELETED flag and a citation note. When the object is accessed via its DOI or directly via its landing page, a tombstone page is shown (see example tombstone page).
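The effect of a deletion on the different layers can be modelled as follows. This hypothetical sketch only illustrates the rule stated above: data and metadata are removed, while the PID record survives with a DELETED flag and a citation note, so that resolving the object leads to a tombstone page instead of an error. The record layout and page texts are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PidRecord:
    citation: str
    deleted: bool = False  # the DELETED flag kept in the Handle metadata


@dataclass
class Repository:
    pids: Dict[str, PidRecord] = field(default_factory=dict)
    objects: Dict[str, bytes] = field(default_factory=dict)  # data and metadata

    def delete(self, pid: str) -> None:
        """Remove data and metadata, but keep the PID record with a DELETED flag."""
        self.objects.pop(pid, None)
        self.pids[pid].deleted = True

    def landing_page(self, pid: str) -> str:
        """Serve a tombstone with the citation note for deleted objects."""
        record = self.pids[pid]
        if record.deleted:
            return f"Tombstone: this object has been deleted. Citation: {record.citation}"
        return f"Landing page for {pid}"
```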

Technical Infrastructure

The technical infrastructure of the DARIAH-DE Repository is provided by GWDG. The overall repository consists of various components. The actual repository software runs on virtual machines on the VMware cluster of GWDG. This virtualization cluster is composed of two nodes located at two different sites in the city of Göttingen, so that if one node fails, the repository continues to operate on the other node.

In addition to the production virtual machine (VM), repository.de.dariah.eu, there is a second VM used for development (https://trep.de.dariah.eu) and a third one for (user) testing and demonstration (https://dhrepworkshop.de.dariah.eu).

The virtualization environment also makes it possible to allocate the underlying hardware resources to the repository dynamically and efficiently. This ensures that the repository is operated continuously on a reliable and efficient infrastructure that can also handle increasing load as the number of repository users grows. This includes higher bandwidth for faster use of the infrastructure and a best-effort provision of round-the-clock connectivity. Since GWDG monitors the VM itself and tracks its resource utilization (CPU, RAM, network bandwidth), fully utilized resources can be detected and the respective capacity can be increased.

Furthermore, all virtual machines of the DARIAH-DE Repository are managed with the configuration management tool Puppet, which ensures consistent operation and configuration of the overall repository. Puppet is used to define all the components that make up the DARIAH-DE Repository and to ensure that all of them are in place (see dhrep puppet module: https://gitlab.gwdg.de/dariah-de-puppet/puppetmodule-dhrep).

DARIAH-DE Repository Components

The following list shows all components that form the overall architecture of the DARIAH-DE Repository:

- DARIAH-DE Publikator is the GUI and entry point to the DARIAH-DE Repository.

- Nginx, the proxy web server in front of the underlying repository components, which isolates the internal architecture of the DARIAH-DE Repository from worldwide access and serves as a protection component and access point to all DARIAH-DE components

- DARIAH IdP (incl. DARIAH LDAP), which regulates access via the federated identity provider Shibboleth (DARIAH-DE Publikator is accessible via Shibboleth authentication)

- DARIAH PDP, the Policy Decision Point for authorization

- DH-oaipmh, the data provider for DARIAH-DE Repository data access and delivery

- DH-crud, the data management system for creating, retrieving, updating and deleting data

- DH-pid, for creating and registering ePIC Handle and Datacite DOI PIDs

- DH-publish, which manages publication processing and workflows

- ElasticSearch, one of the underlying technologies of DH-oaipmh, used for the search functionalities of the repository (CR and GS)

- DARIAH-DE OwnStorage and PublicStorage

- CDSTAR providing OwnStorage and PublicStorage

- Tivoli backup (IBM Spectrum Protect, formerly Tivoli Storage Manager), the backup system of the repository

- Repository Collection Registry and Repository Search

- Collection Registry and Generic Search as part of the DARIAH-DE Research Data Federation Infrastructure

Monitoring and Handling of Incidents

Besides GWDG's monitoring of the basic technical infrastructure, all components listed above are monitored individually by developers and maintainers at SUB and GWDG, the institutions responsible for the Humanities Data Centre, where the DARIAH-DE Repository is located. All components and their dependencies are visualised in the DARIAH-DE Status Page Documentation, so that outages and maintenance cycles can be announced and communicated to users (see DARIAH-DE Status Page).

Fig. 5: A dependency chart of the DARIAH-DE Repository architecture and involved services

A detailed internal security plan ensures that all components provided by the technical infrastructure for the functioning of the DARIAH-DE Repository can quickly be brought back into operation. This plan includes clear procedures and responsibilities for an outage of essential services and has to be updated whenever new potential threats have been identified or the technical infrastructure has changed.

Failures in the individual components of the repository can have various causes, which fall into two groups. First, sudden and unforeseen failures always require exceptional handling; workflows for such unexpected outages are clearly defined. Second, modifications and maintenance work can also lead to outages; to minimize failures in this second category, clear maintenance procedures are defined as well.

The most critical component of the repository is the underlying storage system provided by GWDG: a failure of the storage system causes downtime of the entire repository.
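Dependency information like that in the status page chart can be used to work out which components are affected when one of them fails. The sketch below walks the reverse dependency graph breadth-first. The edges in `DEPENDS_ON` are illustrative assumptions built from the component list above, not the actual chart, and `affected_by` is a hypothetical helper.

```python
from collections import deque
from typing import Dict, List, Set

# Illustrative edges only ("A depends on B"); the authoritative dependencies
# are those shown in the DARIAH-DE Status Page Documentation.
DEPENDS_ON: Dict[str, List[str]] = {
    "Publikator": ["DH-publish", "OwnStorage", "DARIAH IdP"],
    "DH-publish": ["DH-crud", "DH-pid", "OwnStorage"],
    "DH-crud": ["PublicStorage", "ElasticSearch"],
    "DH-oaipmh": ["ElasticSearch"],
    "OwnStorage": ["CDSTAR", "DARIAH PDP"],
    "PublicStorage": ["CDSTAR", "DARIAH PDP"],
    "CDSTAR": ["StorNext NFS"],
}


def affected_by(failed: str, depends_on: Dict[str, List[str]]) -> Set[str]:
    """Return every component that directly or transitively depends on `failed`."""
    dependents: Dict[str, List[str]] = {}
    for component, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(component)
    affected: Set[str] = set()
    queue = deque([failed])
    while queue:
        for parent in dependents.get(queue.popleft(), []):
            if parent not in affected:
                affected.add(parent)
                queue.append(parent)
    return affected
```

With these example edges, `affected_by("StorNext NFS", DEPENDS_ON)` reaches CDSTAR, both storage areas, DH-crud, DH-publish and Publikator, which shows why a storage failure has such a wide impact; a complete graph would also show what the edges omitted here contribute.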